Inference

Once Compressa is set up, the first step to start using a model is to deploy it for inference.

The following instructions show how to:

- Add a model to the Compressa platform

- Deploy the model for inference

- Use the model via Compressa Chat

- Use the model via the REST API

Add model to the platform

To list all available models you can call:

GET /v1/models/

For example:

curl -X 'GET' \
  'http://localhost:8080/api/v1/models/' \
  -H 'accept: application/json'
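The same call can be sketched in Python with only the standard library. This is a minimal sketch, not an official client: the helper names `build_list_models_request` and `list_models` are hypothetical, and the response is returned as parsed JSON because its schema is not described on this page.

```python
import json
import urllib.request

# Base URL taken from the curl example; adjust it for your installation.
BASE_URL = "http://localhost:8080/api"


def build_list_models_request(base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) the GET /v1/models/ request."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/models/",
        headers={"accept": "application/json"},
        method="GET",
    )


def list_models(base_url: str = BASE_URL):
    """Send the request and return the decoded JSON body as-is.

    The response schema is an unknown here, so no fields are assumed.
    """
    request = build_list_models_request(base_url)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a running instance, `print(list_models())` prints whatever JSON the endpoint returns.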

The best models prepared by the Compressa team are already available on the platform.

If a model has not been added yet, call POST /v1/models/add/ and pass the model's Hugging Face ID as the model_id query parameter.

For example:

curl -X 'POST' \
  'http://localhost:8080/api/v1/models/add/?model_id=openchat%2Fopenchat-3.5-0106' \
  -H 'accept: application/json' \
  -d ''

The response contains a job_id. You can track the status of that job via the GET /v1/jobs/{job_id}/status/ endpoint.

Full documentation for working with jobs can be found on the Management API documentation page.
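The add-and-track flow can be sketched in Python as follows. This is a sketch under stated assumptions: the helper names are hypothetical, and the sketch only builds the request and the status URL because the response fields and the possible status values are not listed on this page.

```python
import urllib.parse
import urllib.request

# Base URL taken from the curl example; adjust it for your installation.
BASE_URL = "http://localhost:8080/api"


def build_add_model_request(model_id: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build the POST /v1/models/add/ request for a Hugging Face model ID."""
    # urlencode percent-encodes the slash in IDs such as "org/model".
    query = urllib.parse.urlencode({"model_id": model_id})
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/models/add/?{query}",
        data=b"",  # empty body, mirroring -d '' in the curl example
        headers={"accept": "application/json"},
        method="POST",
    )


def job_status_url(job_id: str, base_url: str = BASE_URL) -> str:
    """Return the URL of GET /v1/jobs/{job_id}/status/ for a given job."""
    safe_job_id = urllib.parse.quote(str(job_id), safe="")
    return f"{base_url.rstrip('/')}/v1/jobs/{safe_job_id}/status/"
```

To use it, send the request with `urllib.request.urlopen`, read the job identifier from the JSON response, and request `job_status_url(job_id)` until the job finishes. The exact status values are described in the Management API documentation.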

Deploy

URL: http://localhost:8080/api/

An existing model can be deployed with the POST /v1/deploy/ endpoint.

You can deploy the base model together with up to 20 adapters created from that base model.

For example, you can deploy OpenChat's Mistral-based model openchat/openchat-3.5-0106 together with the custom adapter openchat-ru-adapter:

curl -X 'POST' \
  'http://localhost:8080/api/v1/deploy/' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
    "model_id": "openchat/openchat-3.5-0106",
    "adapter_ids": [
      "openchat-ru-adapter"
    ]
  }'

The model will be deployed and become available for use shortly.
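A deploy request can also be assembled in Python. The sketch below assumes that `model_id` carries the base model and that `adapter_ids` lists only adapters. This is an interpretation of the description above, not a confirmed schema. The helper name `build_deploy_request` is hypothetical, and the 20-adapter limit from the text is checked on the client side before anything is sent.

```python
import json
import urllib.request

# Base URL taken from the Deploy section; adjust it for your installation.
BASE_URL = "http://localhost:8080/api"
MAX_ADAPTERS = 20  # limit stated in the text above


def build_deploy_request(model_id, adapter_ids=(), base_url=BASE_URL):
    """Build the POST /v1/deploy/ request for a base model and its adapters."""
    adapter_ids = list(adapter_ids)
    if len(adapter_ids) > MAX_ADAPTERS:
        raise ValueError(
            f"at most {MAX_ADAPTERS} adapters can be deployed, got {len(adapter_ids)}"
        )
    # Assumed payload layout: base model in model_id, adapters in adapter_ids.
    payload = {"model_id": model_id, "adapter_ids": adapter_ids}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/deploy/",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "accept": "application/json",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

For the example above, `build_deploy_request("openchat/openchat-3.5-0106", ["openchat-ru-adapter"])` produces the request, which can then be sent with `urllib.request.urlopen`.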

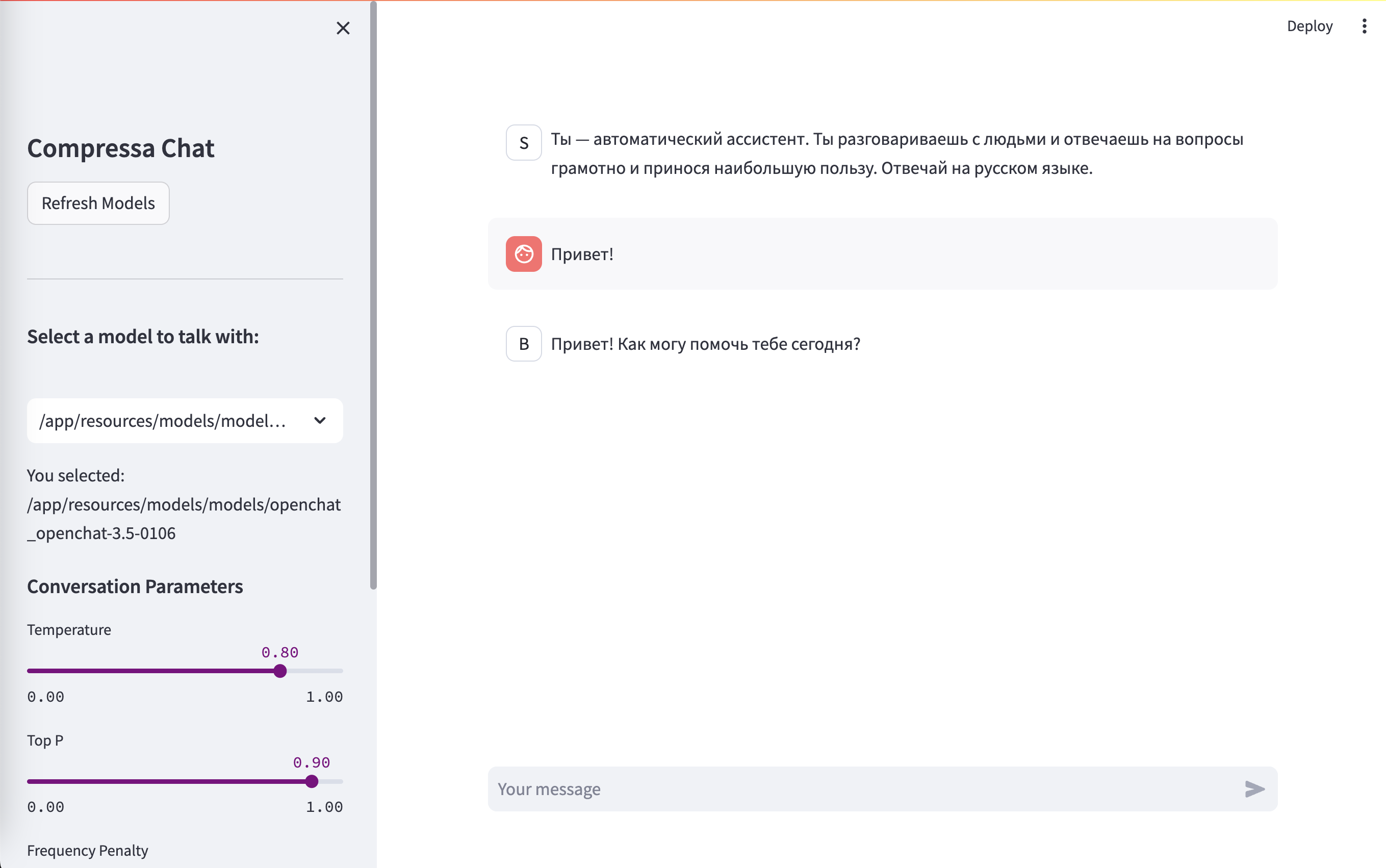

Chat UI

URL: http://localhost:8080/chat/

The Compressa Chat interface allows interaction with the deployed model.

You can select the base model or one of the deployed adapters in the left panel. The conversation parameters can be configured in the same panel.

REST API

URL: http://localhost:5000

Compressa provides a REST API for interacting directly with the deployed model.

The API implements OpenAI's Completions and Chat Completions APIs.
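Because the API follows OpenAI's Chat Completions format, a request might look like the sketch below. Several details are assumptions rather than facts from this page: the `/v1/chat/completions` path is OpenAI's conventional route and may differ in Compressa, the `model` value is assumed to be the ID of the deployed model or adapter, and the helper names are hypothetical.

```python
import json
import urllib.request

# REST API URL given above; adjust it for your installation.
API_URL = "http://localhost:5000"


def build_chat_request(model, user_message, api_url=API_URL, temperature=0.7):
    """Build an OpenAI-style chat completion request (path is an assumption)."""
    payload = {
        "model": model,  # assumed: ID of the deployed model or adapter
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{api_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "accept": "application/json",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Return the assistant text from an OpenAI-style chat completion response."""
    return response_body["choices"][0]["message"]["content"]
```

To try it against a running deployment, send the request with `urllib.request.urlopen`, decode the JSON body, and pass it to `extract_reply`.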